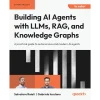

Building LLMs for Production: Enhancing LLM Abilities and Reliability with Prompting, Fine-Tuning, and RAG

Original price: $89.99. Current price: $9.99.

✔️ PDF • Pages: 609

“This is the most comprehensive textbook to date on building LLM applications – all essential topics in an AI Engineer’s toolkit.”

– Jerry Liu, Co-founder and CEO of LlamaIndex

TL;DR

(Updated October 2024) Shaped by feedback from industry leaders, this book is an end-to-end resource for anyone looking to sharpen their skills or enter the field of AI and build an understanding of Generative AI and Large Language Models (LLMs). It explores methods for adapting “foundational” LLMs to specific use cases with improved accuracy, reliability, and scalability. Written by more than 10 people on our team at Towards AI and curated by experts from Activeloop, LlamaIndex, Mila, and more, it is a roadmap to the tech stack of the future.

The book aims to guide developers through creating LLM products ready for production, leveraging the potential of AI across various industries. It is tailored for readers with an intermediate knowledge of Python.

What’s Inside this 470-page Book (Updated October 2024)?

- Hands-on Guide on LLMs, Prompting, Retrieval Augmented Generation (RAG) & Fine-tuning

- Roadmap for Building Production-Ready Applications using LLMs

- Fundamentals of LLM Theory

- Simple-to-Advanced LLM Techniques & Frameworks

- Code Projects with Real-World Applications

- Colab Notebooks that you can run right away

- Community access and our own AI Tutor

Table of Contents

- Chapter I Introduction to Large Language Models

- Chapter II LLM Architectures & Landscape

- Chapter III LLMs in Practice

- Chapter IV Introduction to Prompting

- Chapter V Retrieval-Augmented Generation

- Chapter VI Introduction to LangChain & LlamaIndex

- Chapter VII Prompting with LangChain

- Chapter VIII Indexes, Retrievers, and Data Preparation

- Chapter IX Advanced RAG

- Chapter X Agents

- Chapter XI Fine-Tuning

- Chapter XII Deployment and Optimization

What Experts Think About The Book

“A truly wonderful resource that develops understanding of LLMs from the ground up, from theory to code and modern frameworks. Grounds your knowledge in research trends and frameworks that develop your intuition around what’s coming. Highly recommend.”

– Pete Huang, Co-founder of The Neuron

“This book is filled with end-to-end explanations, examples, and comprehensive details. Louis and the Towards AI team have written an essential read for developers who want to expand their AI expertise and apply it to real-world challenges, making it a valuable addition to both personal and professional libraries.”

– Alex Volkov, AI Evangelist at Weights & Biases and Host of ThursdAI news

“This book is the most thorough overview of LLMs I’ve come across. An excellent primer for newcomers and a valuable reference for experienced practitioners.”

– Shaw Talebi, Founder of The Data Entrepreneurs, AI Educator and Advisor

Whether you’re looking to enhance your skills or dive into the world of AI for the first time as a programmer or software student, our book is for you. From the basics of LLMs to mastering fine-tuning and RAG for scalable, reliable AI applications, we guide you every step of the way.

17 reviews for Building LLMs for Production: Enhancing LLM Abilities and Reliability with Prompting, Fine-Tuning, and RAG


jb (verified owner) –

For those searching for an outstanding book about AI/LLMs this is it!

A truly wonderful, insightful, and valuable resource for you as you seek to learn and grow in this space. OUTSTANDING!

regards,

james b

Stephen Davies (verified owner) –

I’ve only just begun reading this book, and I can say that its style and approach are so far very nice. HOWEVER, be aware that the OpenAI world is changing so fast that even though this book was released earlier this year, it’s already out of date. In the very first “hello world” example in chapter 1, for instance, where you simply connect to the Python API, most of the lines of code have to change — I had to spend 30 minutes digging through the current Python docs to change it to work.

Bottom line: don’t get this book if you’re not a proficient programmer thinking you can just type in the code verbatim. You’ll have to do some sleuthing to keep up with the OpenAI firehose.

Joanna (verified owner) –

This book provides an overview of the more practical side of what you can do with LLMs. It’s easy to read and useful.

Joe Zhou (verified owner) –

This book covers a wide range of topics to get you up to speed with the practices of building LLMs. There are plenty of code examples for you to use. The organization of the book makes it easy to follow. Whether you want to jump-start your understanding of LLM applications or keep key concepts fresh, this book is an excellent resource.

The first edition is a good start, but I believe the second edition can be even better. Here is my feedback: Some of the material is not current due to the rapid advancements in the LLM ecosystem. For example, many LangChain code examples are written in LangChain Core instead of the now-preferred LangChain Expression Language.

Although LlamaIndex founder Jerry Liu wrote the foreword recommending this book, it contains more code examples on LangChain than LlamaIndex. Therefore, if you prefer LlamaIndex over LangChain, this book may not meet your needs. I believe LlamaIndex deserves more coverage in the book.

Despite its title mentioning “production,” the book includes only one chapter on deployment. It should contain more chapters on this topic if it truly aims to cover production development. Chip Huyen recently wrote an excellent blog post about building a GenAI platform (you should search for it and read it), addressing crucial aspects such as guardrails, routers, gateways, caching, and observability. These subjects should be included in a book about building LLMs for production, since its aim is not merely to create LLM prototypes.

The first edition is a good start, but the second edition can be even better. Seeing a solid resource for more people to learn about this area is wonderful. It could easily find a place on your bookshelf. However, this expansive field evolves quickly. You’ll need many books and more dynamic resources to stay sharp in building LLM applications.

RT (verified owner) –

There are plenty of resources out there on how to use language models, but very few that focus on building reliable, scalable systems around them. This book nails that gap. It’s not just about prompting or fine-tuning — it digs into the kind of infrastructure and techniques you’d actually need to deploy something real.

What I liked most:

- Strong, practical emphasis on RAG pipelines: vector stores, retrievers, latency trade-offs, etc.

- Good coverage of fine-tuning vs prompt engineering: when to use each, pros/cons, real examples

- Includes production-minded concerns: evaluation, safety, cost, and latency

- Doesn’t shy away from hard truths, like how brittle models can be in edge cases

Both authors have been active in the open-source AI space, and it shows. The examples are current, and the implementation advice feels grounded in real experience, not theory.

🧠 Heads-up:

This book assumes you’re already familiar with core transformer concepts and the basics of how LLMs work. It’s not a beginner intro — more of a guide for teams or individuals moving from “cool demo” to real-world app.

📦 TL;DR:

If you’re already comfortable using models like GPT or Claude and now want to build robust, useful, and shippable LLM-powered products, this is absolutely worth your time.